David Slater

ABSTRACT

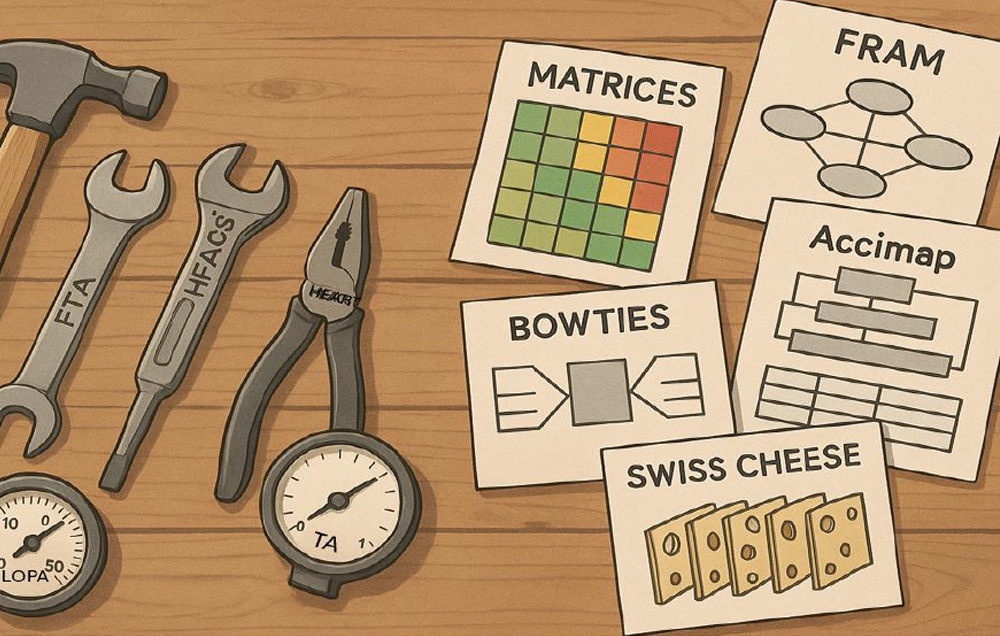

Safety science has evolved steadily from the analysis of mechanical failure to the modelling of emergent behaviour in complex socio-technical systems. Early quantitative methods such as Fault Tree Analysis (FTA) and Probabilistic Risk Assessment (PRA) established the foundations of analytical rigour, but their deterministic assumptions limited their capacity to explain human and organisational performance. The subsequent development of Task Analysis, Human Reliability Analysis (HRA), and the Human Factors Analysis and Classification System (HFACS) extended the scope to human variability but retained a linear, reductionist logic.

The systems-thinking movement, beginning with Reason’s Swiss Cheese Model, Rasmussen’s AcciMap, and Leveson’s Systems-Theoretic Accident Model and Processes (STAMP), introduced the ideas of feedback, hierarchy, and constraint. Hollnagel’s Functional Resonance Analysis Method (FRAM) completed this conceptual progression by modelling how variable functional interactions produce emergent outcomes. Together, these methods trace the transition from failure analysis to resilience analysis: from explaining what went wrong to understanding why things usually go right.

In modern safety assessment, static or purely qualitative tools such as Bow-Ties, risk matrices, and Layer of Protection Analysis (LOPA) are no longer sufficient on their own. Integrating the quantitative precision of FTA and HRA, the systemic structure of STAMP, and the dynamic variability modelling of FRAM, augmented by metadata and AI reasoning, offers a unified, predictive framework. This convergence of control logic and functional resonance defines the next stage of system safety science.

Keywords

System Safety; Fault Tree Analysis (FTA); STAMP; STPA; FRAM; Functional Resonance; Resilience Engineering; Human Reliability; Probabilistic Risk Assessment (PRA); Safety-II; Socio-Technical Systems; AI-Assisted Safety Modelling; Large Language Models (LLMs)