For estimating probabilities of outcomes in complex systems. | David Slater and Rees Hill

AN “INTELLIGENT” FMV (FRAM MODEL VISUALISER) FOR ESTIMATING PROBABILITIES OF OUTCOMES IN COMPLEX SYSTEMS.

David Slater – dslater@cambrensis.org

Rees Hill – rees.hill@zerprize.co.nz

ABSTRACT

The Functional Resonance Analysis Method (FRAM) has emerged as a valuable tool for modelling and understanding the dynamic behaviour of complex socio-technical systems. Although FRAM has traditionally been used as a qualitative method, recent developments in the FRAM Model Visualiser (FMV) have introduced quantitative capabilities, enabling the systematic analysis of functional interactions and variability within a probabilistic framework. This paper explores the potential of FRAM to bridge the gap between human factors specialists, who prioritise qualitative insights, and engineers, who demand numerical rigour for system reliability and safety predictions.

Drawing parallels between FRAM functions and neural processes, we develop a “Neuron FRAM” model, inspired by the McCulloch-Pitts neuron model, that integrates probabilistic aspects of function coupling. By representing FRAM functions as computational units capable of transmitting, coupling and adapting probabilistic metadata, this approach facilitates predictive modelling of emergent system behaviour under variable conditions. Demonstrations include predicting success and failure probabilities for safety-critical systems, illustrating the approach’s practical relevance in real-world applications such as healthcare, aviation and AI systems.
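The neuron analogy can be illustrated with a minimal sketch. It assumes, purely for illustration, that each incoming FRAM aspect (for example Input, Precondition, Resource) is either satisfied or not, independently, with a known probability, and that a function “fires” (produces its Output) when the summed weight of its satisfied aspects reaches a threshold, as in a McCulloch-Pitts-style threshold unit. The function names `unit_fires` and `firing_probability`, and all weights, thresholds and probabilities, are hypothetical; this is not the FMV’s own implementation, which is not specified here.

```python
from itertools import product
from typing import Dict


def unit_fires(active: Dict[str, bool], weights: Dict[str, float], threshold: float) -> bool:
    """McCulloch-Pitts-style threshold unit: fire when the summed weight of
    the satisfied incoming aspects reaches the threshold."""
    return sum(weights[name] for name, on in active.items() if on) >= threshold


def firing_probability(p_satisfied: Dict[str, float],
                       weights: Dict[str, float],
                       threshold: float) -> float:
    """Exact probability that the unit fires, ASSUMING each incoming aspect is
    satisfied independently with the given probability (a hypothetical
    simplification). Enumerates all 2**n patterns, so it is only meant for
    the handful of aspects a single FRAM function typically has."""
    names = list(p_satisfied)
    total = 0.0
    for states in product([False, True], repeat=len(names)):
        active = dict(zip(names, states))
        # Probability of this particular pattern of satisfied/unsatisfied aspects.
        p_pattern = 1.0
        for name, on in active.items():
            p = p_satisfied[name]
            p_pattern *= p if on else 1.0 - p
        if unit_fires(active, weights, threshold):
            total += p_pattern
    return total


if __name__ == "__main__":
    equal = {"Input": 1.0, "Precondition": 1.0, "Resource": 1.0}
    # Hypothetical upstream function: all three aspects required (AND-like coupling).
    upstream = firing_probability(
        {"Input": 0.99, "Precondition": 0.95, "Resource": 0.90}, equal, threshold=3.0)
    # Its output feeds the Input aspect of a hypothetical downstream function
    # that tolerates any one missing aspect (2-of-3 coupling).
    downstream = firing_probability(
        {"Input": upstream, "Precondition": 0.95, "Resource": 0.90}, equal, threshold=2.0)
    print(f"upstream fires:   {upstream:.4f}")
    print(f"downstream fires: {downstream:.4f}")
```

Changing a weight, threshold or aspect probability and re-running gives a relative “what if?” comparison. The independence assumption is the main simplification: when two aspects depend on a shared upstream function they are correlated, and simply multiplying probabilities would then misstate the result.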

The proposed framework highlights FRAM’s versatility as a system modelling methodology capable of quantifying emergent properties while maintaining its core focus on functional interactions and variability. This integration offers an innovative pathway to enhance the resilience, transparency, and reliability of complex systems, paving the way for broader adoption in domains that require both qualitative and quantitative insights.

Key words – Safety, Risk, Bow Ties, LOPA, FRAM, AI

INTRODUCTION

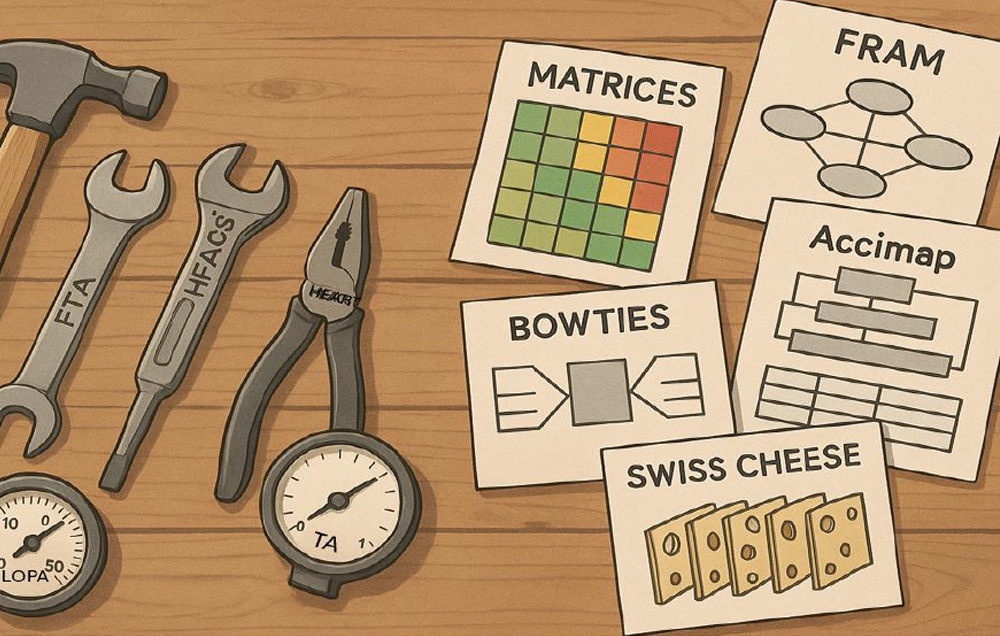

The Functional Resonance Analysis Method (FRAM) is being used by an increasing variety of users for a wide range of applications. It is attracting interest from safety professionals who have traditionally relied on methods such as Root Cause Analysis, Failure Modes and Effects Analysis (FMEA) and Bow Ties to understand how modern engineering systems will behave in practice. For this group, however, the ability to quantify potential failure frequencies is a major requirement. Traditionally this was done through a full Probabilistic (Quantitative) Risk Analysis (PRA/QRA) of the system. In today’s cost-conscious environment, however, the resources this requires are generally too scarce and expensive for routine applications, so alternatives such as Layers of Protection Analysis (LOPA) and semi-quantitative assessments such as risk matrices or heat maps have to suffice. Because none of these alternatives feels rigorous enough, the professionals using them have little confidence in their predictions. It is for this reason that the FRAM approach now seems worth exploring.

To most users, the FRAM approach relies on producing a “picture” (a metamodel) of the complex systems involved, rigorous enough to trace and predict how the interdependencies between the interacting functions produce outcomes, both expected and unexpected. To engineers, this resembles a traditional HAZOP of Process and Instrumentation Diagrams (P&IDs), tracing systemic behaviour under variable, real-life operating conditions. Both groups find the FRAM approach a valid and very helpful way of carrying out (“as done”) safety “audits” of (“as imagined”) designs and procedures.

So for the mainly “human factors” users, quantification is unnecessary, even irrelevant, while for engineers the lack of quantification disqualifies FRAM as a “serious” approach worth considering. Recently, however, the systematic rigour with which the FRAM method models the details of functional interactions has been formalised to include quantitative tracing of the properties and behaviour of individual functions as a process unfolds or develops. This feature is now incorporated in the software used by most FRAM users, the FRAM Model Visualiser (FMV).

While this feature is proving invaluable to academic research groups, particularly in healthcare, aviation and AI applications such as autonomous vehicles and robotic surgery, the main body of users is as yet unaware of it or sees no need for it, and is discouraged by the need to understand the full details of yet another safety modelling method known by an acronym (lost in the alphabet soup).

For any user of the FRAM approach, however, the ability to predict system behaviour under ideal or varying conditions is its main attraction. Communicating these predictions currently relies mainly on conveying a visual understanding of what the FRAM models imply. A more quantitative, consistent measure of these behaviours therefore has obvious appeal, if only as relative ratings for scrutiny and comparison (“what if?”).

To achieve this acceptance, the basis of the quantification needs to be self-explanatory and accepted as valid by a wide range of users, from human factors specialists to engineers.
